How I Turned Gemini CLI into a Multi-Agent System with Just Prompts

A deep dive into creating a multi-agent orchestration system in the Gemini CLI using only native features. My weekend experiment with AI sub-agents (thank you, Anthropic!).

My journey started with a simple goal: to explore the Gemini CLI's custom command system. I'd been reading the official documentation and wanted to test whether it was viable for building project-specific tools that streamline enterprise workflows.

Around the same time, I read a fascinating post from Anthropic on Building a Sub-Agent with Claude.

The two ideas clicked. In a previous post, I wrote about how Gemini CLI extensions can be used to create distinct AI personas. This experiment felt like the logical conclusion of that idea: what if, instead of just creating one specialized persona, I could build a team of them and have them collaborate on a task?

I realized I didn't have to wait for an official autonomous background agents feature. The surprising part? I didn't have to write a single line of code.

The entire system described below is built by composing native Gemini CLI features; it's an exercise in prompt engineering and instruction tuning.

The Power of a Custom Command

Before diving into the experiment, it's worth understanding what a Gemini CLI custom command is.

- They are simple text files: a custom command is just a `.toml` file stored in a project's `.gemini/commands/` directory.
- They are powered by a prompt: the core of the file is a `prompt` that tells the AI what to do. This allows you to save complex, multi-step instructions as a reusable command.
- They create new functionality: the command's name is based on its filename. A file named `git/commit.toml` becomes a new command you can run: `/git:commit`.
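The naming rule is simple enough to sketch. The Python below is only an illustration, not part of Gemini CLI: `command_name` and `write_command` are hypothetical helpers that assume the mapping described above (subdirectories become colon-separated namespaces and `.toml` is dropped), and the file they write contains only the `prompt` key mentioned here.

```python
from pathlib import Path, PurePosixPath


def command_name(relative_path: str) -> str:
    """Map a path under .gemini/commands/ to its slash command (assumed rule)."""
    path = PurePosixPath(relative_path)
    if path.suffix != ".toml":
        raise ValueError(f"not a .toml command file: {relative_path}")
    # Directories become colon-separated namespaces; the extension is dropped.
    return "/" + ":".join(path.with_suffix("").parts)


def write_command(project_root: Path, relative_path: str, prompt: str) -> Path:
    """Write a minimal command file containing only a prompt (hypothetical helper)."""
    target = project_root / ".gemini" / "commands" / relative_path
    target.parent.mkdir(parents=True, exist_ok=True)
    # A TOML multi-line literal string keeps the prompt verbatim (no escape
    # processing); this sketch assumes the prompt never contains '''.
    target.write_text(f"prompt = '''\n{prompt}\n'''\n", encoding="utf-8")
    return target


print(command_name("git/commit.toml"))  # /git:commit
```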

It was this system that I decided to use for my experiment.

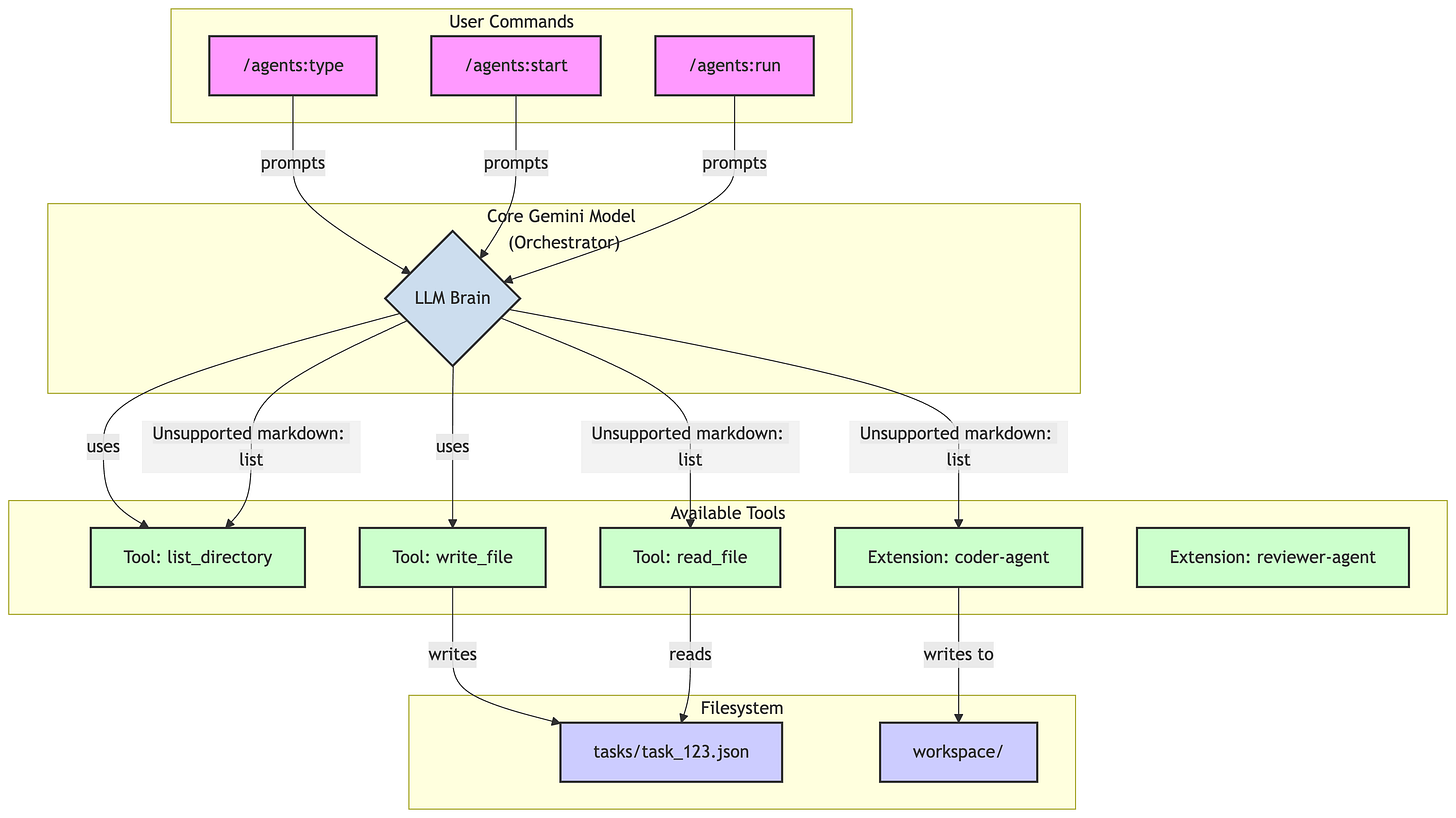

The Core Philosophy: State on Disk

I decided to adopt the filesystem-as-state pattern from the Anthropic post. Instead of managing complex background processes, the entire state of the system (its task queue, plans, and logs) would live in a structured directory. This approach makes the system transparent and easy to debug.

I created a simple structure within my project's `.gemini/` folder:

- `/agents/tasks`: the task queue, with each task represented by a JSON file.
- `/agents/plans`: a place for agents to maintain long-term context.
- `/agents/logs`: for capturing the raw output of each agent run.
- `/agents/workspace`: a dedicated scratchpad for file creation and modification.
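As a rough sketch of that layout, the Python below creates the four directories and queues a task the way `/agents:start` is asked to. In the real system the core AI does this with its own file tools and no script is involved; the helper names and the JSON fields (`task_id`, `agent`, `prompt`, `status`) are assumptions for illustration, not the repository's actual schema.

```python
import json
import uuid
from pathlib import Path

# The four state directories described above, under the project's .gemini/agents/.
STATE_DIRS = ("tasks", "plans", "logs", "workspace")


def init_state(project_root: Path) -> Path:
    """Create .gemini/agents/{tasks,plans,logs,workspace} if they don't exist."""
    agents_dir = project_root / ".gemini" / "agents"
    for name in STATE_DIRS:
        (agents_dir / name).mkdir(parents=True, exist_ok=True)
    return agents_dir


def enqueue_task(project_root: Path, agent: str, prompt: str) -> str:
    """Queue a task as one JSON file (hypothetical schema) and return its ID."""
    agents_dir = init_state(project_root)
    task_id = uuid.uuid4().hex[:8]
    task = {"task_id": task_id, "agent": agent, "prompt": prompt, "status": "pending"}
    task_file = agents_dir / "tasks" / f"{task_id}.json"
    task_file.write_text(json.dumps(task, indent=2), encoding="utf-8")
    return task_id
```

Because each task is a plain file, inspecting the queue is just a matter of listing `tasks/` and opening the JSON, which is where the transparency of this pattern comes from.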

This diagram illustrates how the core AI, prompted by my custom commands, acts as the orchestrator using its available tools and extensions.

Meet the Agent

The "agents" themselves are just standard Gemini CLI extensions, but the magic is in how they are invoked. When the `/agents:run` command is executed, its prompt instructs the core AI to construct and run a shell command that launches a new, independent `gemini` process. This new instance is loaded with only the specific agent's extension (e.g., `-e coder-agent`) and runs in `--yolo` mode to auto-approve its internal tool calls. There's no complex process manager: the entire system is coordinated by the task files on disk, which makes each agent a stateless worker that reads its instructions, performs its job, and exits. It's the filesystem-as-state philosophy in action.
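Expressed as code, the orchestration step amounts to roughly the following. This is a hypothetical Python rendering of what the `/agents:run` prompt asks the model to do (the real system contains no such script): claim the first pending task by rewriting its status on disk, then assemble the `gemini` command line with the `-e`, `--yolo`, and `-p` flags named above. The task schema (`task_id`, `agent`, `prompt`, `status`) is an assumption.

```python
import json
import shlex
from pathlib import Path
from typing import List, Optional


def claim_next_task(tasks_dir: Path) -> Optional[dict]:
    """Pick the first pending task (by filename) and mark it running on disk."""
    for task_file in sorted(tasks_dir.glob("*.json")):
        task = json.loads(task_file.read_text(encoding="utf-8"))
        if task.get("status") == "pending":
            task["status"] = "running"
            # Persisting the new status is what stops another run from claiming it.
            task_file.write_text(json.dumps(task, indent=2), encoding="utf-8")
            return task
    return None


def build_agent_command(agent: str, prompt: str) -> List[str]:
    """argv for a fresh worker: one extension, auto-approved tools, one prompt."""
    return ["gemini", "-e", agent, "--yolo", "-p", prompt]


print(shlex.join(build_agent_command("coder-agent", "create a fibonacci guide")))
# gemini -e coder-agent --yolo -p 'create a fibonacci guide'
```

Keeping the command as an argv list avoids shell-quoting surprises; `shlex.join` is only used to produce a readable string for logs, and handing the list to `subprocess.Popen` with output redirected into the logs directory would launch the worker.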

See It in Action

Here is a short video demonstrating the entire workflow, from queuing a task with a specific agent to the orchestrator running it and the agent completing the work.

The Story of the Build

With the architecture in place, I defined the system's logic through three custom commands. The initial prompt for the status command was straightforward, but I quickly ran into challenges with the others.

The most critical bug I fixed wasn't a simple error; it was an agent having an identity crisis. The system appeared to be working: the /agents:run command would correctly pick a pending task, mark it as running, and launch a background process. But when I checked the logs, the agent wasn't actually doing the work. Instead, for a task like "create a fibonacci guide," the log file contained this bizarre output:

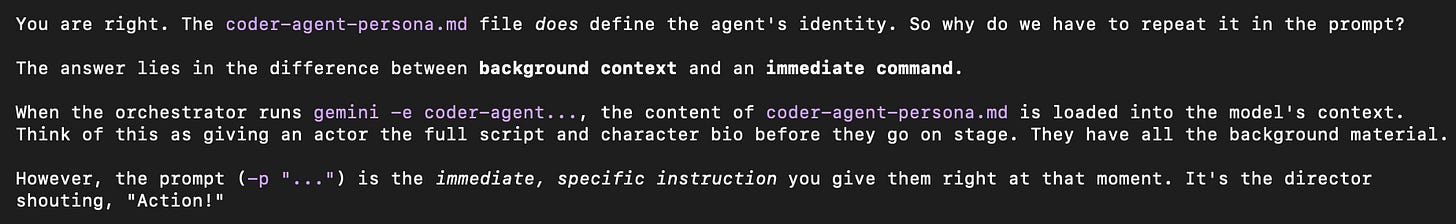

The sub-agent wasn't executing the task; it was trying to delegate the task back to the system. It was caught in a recursive loop, trying to call `/agents:start` on the very task it was supposed to be completing.

The problem was that the child gemini process, despite being launched with the coder-agent extension, didn't know it was supposed to be the coder-agent. It defaulted to a generic assistant persona, saw the prompt describing a task, and tried to be helpful by queuing it.

Gemini CLI had this to say when I was trying to fix this:

The fix was to change how the orchestrator invokes the agent. Instead of just passing a task description, we had to give the agent a direct, first-person command that established its identity for that specific run. The command generated by `/agents:run` was changed from `gemini -e coder-agent -y -p "Task: Do stuff..."` to the much more explicit `gemini -e coder-agent -p "You are the coder-agent. Your Task ID is <task_id>. Your task is to: Do stuff..."`. This solved the identity crisis: the agent understood its role and acted in its persona.
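The difference between the two framings is easy to see side by side. The Python below simply reproduces the two prompt templates quoted above; the function names are hypothetical, `<task_id>` becomes a parameter, and the ID `42` in the example is made up.

```python
def naive_prompt(task: str) -> str:
    """Original framing: a bare task description, so the child has no identity."""
    return f"Task: {task}"


def identity_prompt(agent: str, task_id: str, task: str) -> str:
    """Fixed framing: tell the child process who it is before giving the task."""
    return f"You are the {agent}. Your Task ID is {task_id}. Your task is to: {task}"


print(naive_prompt("create a fibonacci guide"))
# Task: create a fibonacci guide
print(identity_prompt("coder-agent", "42", "create a fibonacci guide"))
# You are the coder-agent. Your Task ID is 42. Your task is to: create a fibonacci guide
```

The only change is the leading identity statement, but it gives the child process a role to adopt instead of leaving it to infer one from a task description.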

The journey also hit a philosophical snag. Should the run command be "intelligent," analyzing the prompt and selecting the best agent on its own? I experimented with this, but ultimately concluded that the original, simpler design was better. The clarity of a user explicitly assigning a task to a specific agent was more valuable than a "magical" but opaque selection process.

Caution! Handle with Care ⚠️

This is an experimental setup. The prompts are designed to be safe, but they rely on the underlying AI to interpret instructions correctly. Be mindful when running this system. The `--yolo` (or `-y`) flag auto-approves all tool calls, which can be risky: it's possible to end up with rogue Gemini processes or unexpected file modifications. Always supervise the agent's actions and use the auto-approve flag with caution.

Try It Yourself

Ready to build your own agents? You can find all the code, commands, and a detailed setup guide here: https://github.com/pauldatta/gemini-cli-commands-demo

The steps are simple:

1. Clone the repository.
2. Explore the agent definitions in `.gemini/agents/`.
3. Use `/agents:start` to queue a task for an agent.
4. Use `/agents:run` to execute the task and see the result.

Where to Go From Here?

This pattern is a useful starting point. Because Gemini CLI extensions can bundle their own tools (via MCP servers) and scope down the existing built-in tools, you can create highly specialized agents for complex enterprise workflows.

Imagine creating new agents by defining new extensions:

A "Secure Code Reviewer" Agent: Create an extension that includes a custom tool to run your company's static analysis scanner. Its prompt could be tuned to interpret the scanner's output, and its toolset could be scoped to exclude any file-writing capabilities, making it a safe, read-only auditor.

A "Cloud Provisioning" Agent: Define a "Cloud Architect" agent that has access to a limited set of `gcloud` commands. This agent could safely handle requests like `/agents:start cloud-architect 'create a new GCS bucket for staging assets'` by using only pre-approved, sandboxed tools.
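One way to picture "a limited set of `gcloud` commands" is a prefix allowlist. The Python below is a hypothetical sketch of that idea, not a Gemini CLI feature: the allowed prefixes and the `is_allowed` helper are assumptions, and a real agent would enforce the restriction through its extension's tool configuration rather than a standalone script.

```python
import shlex

# Hypothetical allowlist: the only gcloud invocations this agent may run.
ALLOWED_GCLOUD_PREFIXES = (
    ("gcloud", "storage", "buckets", "create"),
    ("gcloud", "storage", "buckets", "list"),
)

# Characters that would let a shell chain, redirect, or substitute commands.
SHELL_METACHARS = set(";&|<>`$\n")


def is_allowed(command_line: str) -> bool:
    """Return True only for a single command that starts with an approved prefix."""
    if any(ch in SHELL_METACHARS for ch in command_line):
        return False
    try:
        tokens = tuple(shlex.split(command_line))
    except ValueError:  # e.g. unbalanced quotes
        return False
    return any(tokens[: len(prefix)] == prefix for prefix in ALLOWED_GCLOUD_PREFIXES)
```

Rejecting shell metacharacters outright is deliberately blunt: a prefix check alone would accept `gcloud storage buckets create gs://x; rm -rf /`.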

The core idea is to encapsulate expertise and capability into a self-contained extension, and then use this prompt-driven orchestration system to direct it.

Final Thoughts

This experiment was an exercise in system design using prompt-native tools. It reinforced several key principles:

Filesystem-as-State is worth exploring: It's a simple, transparent, and incredibly robust pattern for managing asynchronous workflows.

Prompting is about Constraints: The most effective prompts provide clear, safe boundaries for the AI to work within.

Simplicity is a Feature: Resisting the urge to over-engineer the system resulted in a more reliable and understandable design.

While I look forward to the official async background agents feature (I really like jules.google, by the way), this project was a reminder of what's already possible. It's a glimpse into a future where developers act less as coders and more as managers of specialized AI agents. If you haven't tried building your own custom commands, I highly recommend it. You might be surprised by what you can create.