My AI Code Reviewer Needed a Project, So I Vibe-Coded One for It

This post details my journey of building a custom AI code reviewer with gemini-cli. It then dives into the art of crafting effective prompts for the AI, using Google's own engineering guides.

I started building a script that uses gemini-cli to review code and ran into a practical issue: I needed code to review to test my reviewer. A snippet wouldn't do; I needed a real project with pull requests to see whether it would actually work. I decided to 'vibe-code' a simple front-end application, giving my AI reviewer its very first assignment.

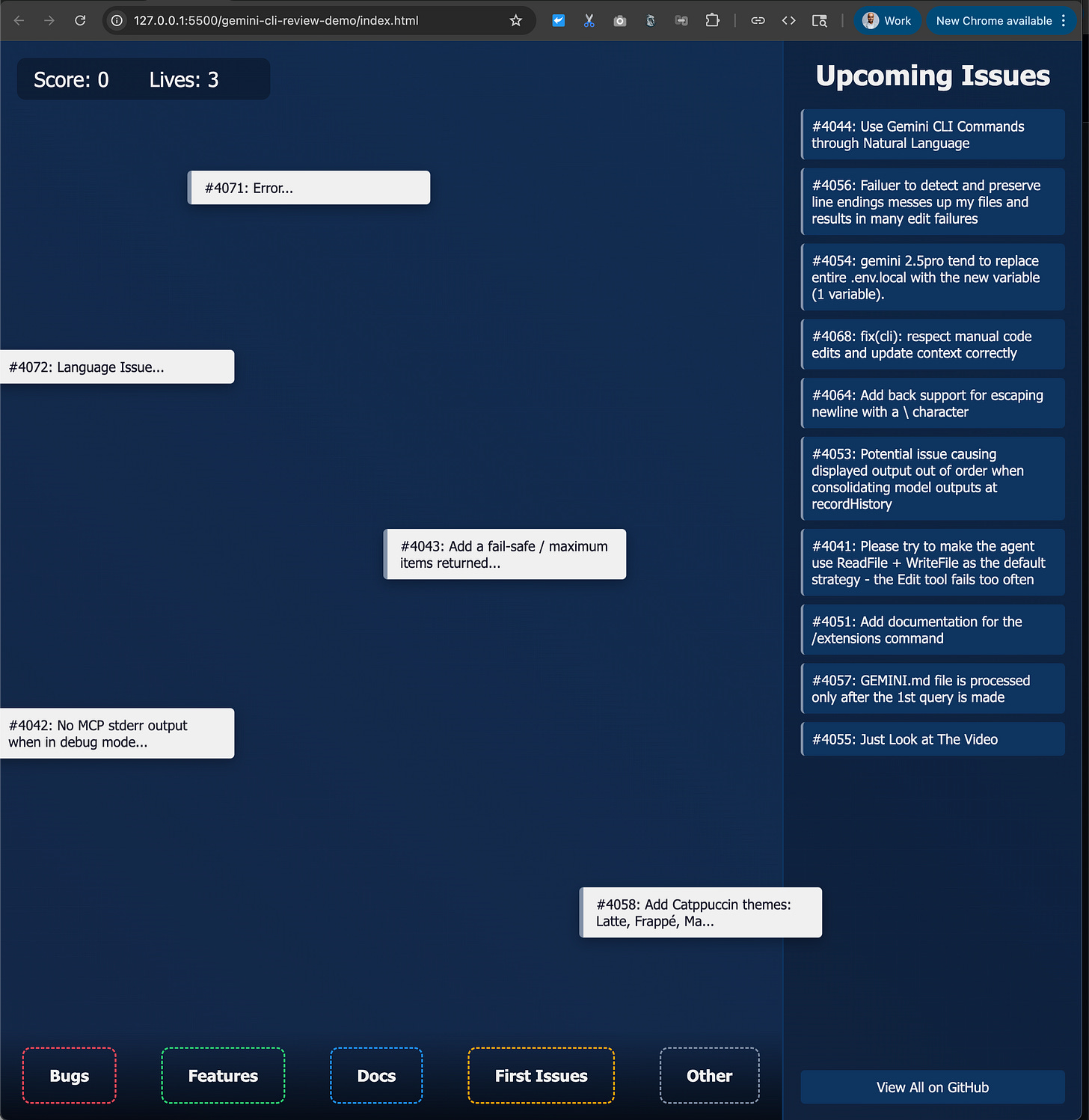

That's what led to this little issue triage app. It's a web app that pulls and displays open issues from the official gemini-cli GitHub repository, and it became a testbed for my experiment.

You can see the live demo of the final app here: Live Issue Triage App - it's a little (very?) broken, please submit PRs...

The Official Way vs. The DIY Deep Dive

Now, before we go further, it's important to know that Google is on the case. The official Gemini Code Assist app for GitHub and gemini-cli-action are available. You can read the official announcement blog to see how it integrates directly into the developer workflow.

They provide a powerful, integrated experience right out of the box, with documentation on reviewing code with Gemini.

So why build your own?

Because understanding how to build your own automation is an incredibly valuable skill. It gives you the flexibility to tailor the process to your exact needs and opens your eyes to the endless possibilities of Gemini CLI.

Our Custom Workflow: A Look Under the Hood

We're going to create a GitHub Action that's triggered on every pull request. Here's the plan:

Gather Rich Context: We'll send the diff and the full content of every changed file to give the AI the complete picture.

Engineer a Prompt: We'll craft a prompt that tells Gemini exactly how to behave and what to look for.

Run the CLI: We'll pipe all of this into gemini-cli.

Deliver the Feedback: We'll post the code review directly to the PR.
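The four steps above can be sketched roughly as follows. This is a minimal Python illustration, not the post's actual scripts/code-review.sh. The function name `review_pull_request`, the base ref `origin/main`, the `--- DIFF ---` and `--- FILE ---` delimiters, and the injectable `run` helper are all assumptions made for the example. It also assumes that `git`, `gemini`, and the GitHub CLI (`gh`) are installed and authenticated, and that `gemini` reads its prompt from standard input when run non-interactively.

```python
import subprocess
from pathlib import Path
from typing import Callable, Optional

# A "runner" takes a command plus optional stdin text and returns stdout.
Runner = Callable[[list[str], Optional[str]], str]


def run_command(cmd: list[str], stdin: Optional[str] = None) -> str:
    """Run a command, feed it optional stdin text, and return its stdout."""
    result = subprocess.run(cmd, input=stdin, capture_output=True, text=True, check=True)
    return result.stdout


def review_pull_request(pr_number: int, prompt: str, base: str = "origin/main",
                        run: Runner = run_command) -> str:
    """Hypothetical pipeline: gather context, ask Gemini, post the review."""
    # 1. Gather rich context: the diff plus the full text of every changed file.
    diff = run(["git", "diff", f"{base}...HEAD"], None)
    changed = run(["git", "diff", "--name-only", f"{base}...HEAD"], None).splitlines()
    files = []
    for name in changed:
        path = Path(name)
        if path.is_file():  # files deleted by the PR no longer exist on disk
            text = path.read_text(encoding="utf-8", errors="replace")
            files.append(f"--- FILE: {name} ---\n{text}")
    packet = f"--- DIFF ---\n{diff}\n" + "\n".join(files)

    # 2 and 3. Prepend the engineered prompt and pipe everything into the CLI on stdin.
    review = run(["gemini"], f"{prompt}\n\n{packet}")

    # 4. Deliver the feedback as a PR comment; "--body-file -" reads the body from stdin.
    run(["gh", "pr", "comment", str(pr_number), "--body-file", "-"], review)
    return review
```

Passing `run` as a parameter keeps the sketch testable: a fake runner can stand in for `git`, `gemini`, and `gh`, so the flow can be checked without touching a real repository.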

Here are the components that make it happen.

The Art of the Prompt: Guiding Your AI Reviewer

The quality of the AI's review is directly proportional to the quality of your prompt. A specific, well-structured prompt results in a focused, actionable review.

For a great foundation, check out Google's Introduction to prompt design.

For code reviews, we can go even deeper by modeling our prompt on how expert human reviewers operate. Google's own Engineering Practices documentation provides two essential guides that we can use to teach our AI:

What to look for in a code review: This is the checklist of what to examine.

The Standard of Code Review: This is the philosophy—the how and why of a good review.

By combining these resources, we can create a truly useful prompt:

Assign a Persona and Philosophy: Instead of just "expert reviewer," we can now say, "You are an expert code reviewer who adheres to the Google Engineering standard of code review. Your primary goal is to ensure that every pull request improves the overall health of the codebase." This sets a high bar.

Provide Rich Context: Our script already provides the diff and full files. This is a critical step that gives the AI the context it needs to make informed judgments.

Set Guidelines Based on a High Standard: We can translate the principles from the guides directly into our prompt's instructions:

Net Improvement: "First, determine if this change is a net improvement to the codebase. Even if it's not perfect, does it move the code in the right direction?"

Differentiate Feedback: "Clearly separate your feedback into 'Mandatory Changes' (for things that violate style guides or introduce bugs) and 'Optional Suggestions' (for areas that could be improved but are not blockers). This respects the author's ownership."

Check for Consistency: "Ensure the changes are consistent with the surrounding code's style and patterns."

The Checklist: Incorporate the "what to look for" items: Design, Functionality, Complexity, Tests, Naming, Comments, and Style.

Define a Clear Output Format: A structured format with clear sections (e.g., `### Net Improvement Analysis`, `### Mandatory Changes`, `### Optional Suggestions`) makes the review easy to parse.

By grounding our prompt in these proven engineering practices, we can elevate the AI from a simple syntax checker to a genuinely insightful review assistant that understands the philosophy of good code review.
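To make this concrete, here is one way the pieces could be assembled into a single prompt string. It is only a sketch: the function name `build_review_prompt`, the constant names, and the exact layout of the assembled text are my own assumptions, while the persona, guideline, checklist, and section wording is taken from the list above.

```python
PERSONA = (
    "You are an expert code reviewer who adheres to the Google Engineering "
    "standard of code review. Your primary goal is to ensure that every pull "
    "request improves the overall health of the codebase."
)

GUIDELINES = [
    "First, determine if this change is a net improvement to the codebase. "
    "Even if it's not perfect, does it move the code in the right direction?",
    "Clearly separate your feedback into 'Mandatory Changes' (for things that "
    "violate style guides or introduce bugs) and 'Optional Suggestions' (for "
    "areas that could be improved but are not blockers).",
    "Ensure the changes are consistent with the surrounding code's style and patterns.",
]

CHECKLIST = ["Design", "Functionality", "Complexity", "Tests", "Naming", "Comments", "Style"]

OUTPUT_SECTIONS = ["Net Improvement Analysis", "Mandatory Changes", "Optional Suggestions"]


def build_review_prompt(persona=PERSONA, guidelines=GUIDELINES,
                        checklist=CHECKLIST, sections=OUTPUT_SECTIONS) -> str:
    """Assemble persona, guidelines, checklist, and output format into one prompt."""
    lines = [persona, "", "**Review Guidelines:**"]
    lines += [f"- {g}" for g in guidelines]
    lines.append(f"- Examine each of: {', '.join(checklist)}.")
    lines += ["", "**Output Format:**", "Structure your feedback using these markdown sections:"]
    lines += [f"### {s}" for s in sections]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_review_prompt())
```

Keeping each ingredient in its own constant makes it easy to change one of them (say, the checklist) and compare the resulting reviews.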

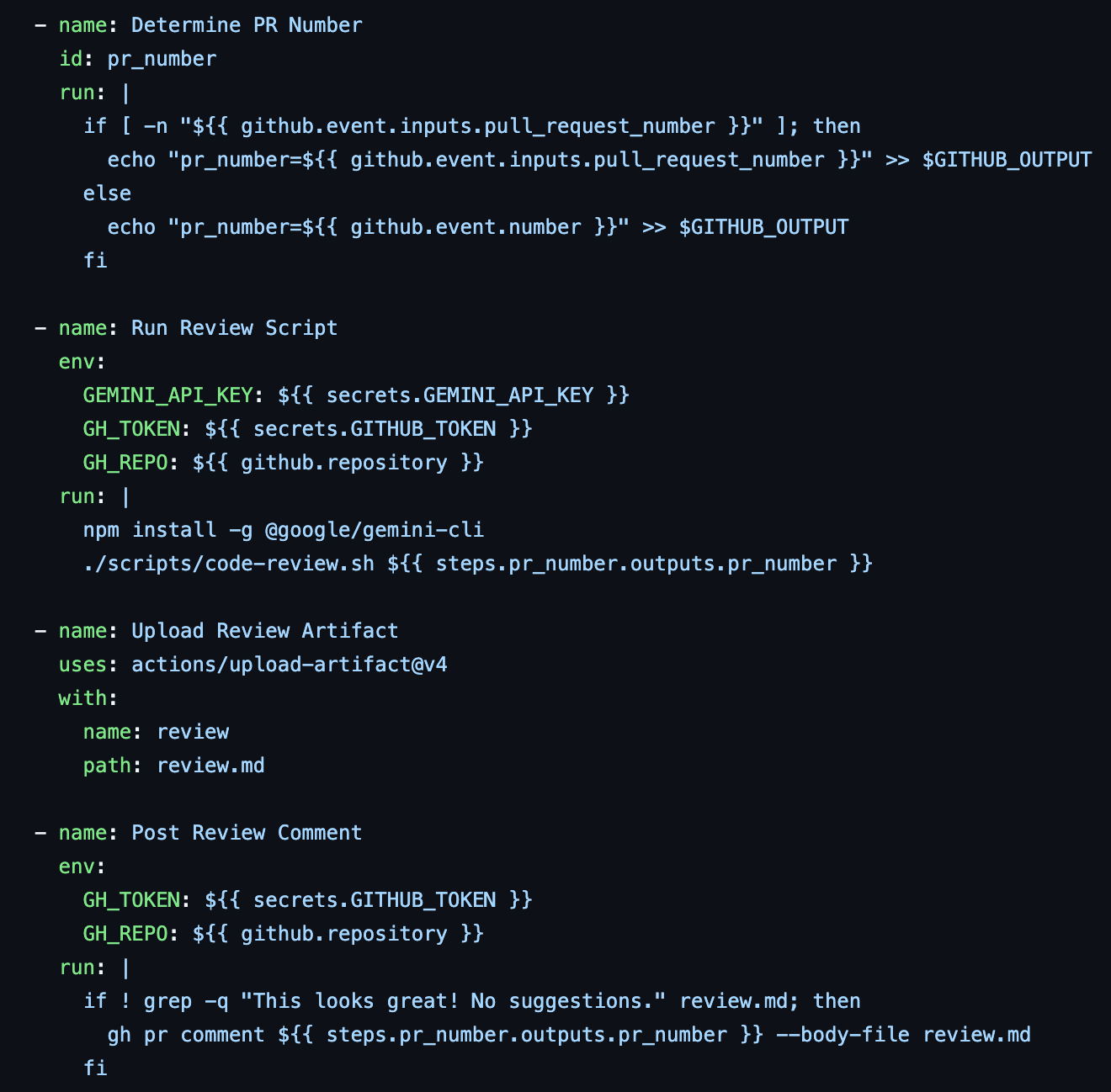

The Workflow File: .github/workflows/code-review.yml

This file orchestrates the process. It defines the triggers and steps, calling our review script at the right moment. You can view the full file on GitHub, but the core of the logic is handled by the script it calls.

The Engine: scripts/code-review.sh

This script is the core of our custom automation. It gathers all the context—the diff and the full files—and packages it up for Gemini.
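The "context packet" idea can be illustrated in a few lines. The sketch below is written in Python rather than shell and is not the real script. The function name `build_context_packet` and the `=== ... ===` delimiters are assumptions chosen for readability. It takes the diff and the file contents as plain strings so that the layout is easy to see.

```python
from typing import Mapping, Optional


def build_context_packet(diff: str, files: Mapping[str, Optional[str]]) -> str:
    """Bundle the PR diff and the full text of each changed file into one string.

    `files` maps a repository-relative path to the file's current contents,
    or to None when the PR deleted the file.
    """
    parts = ["=== PULL REQUEST DIFF ===", diff.rstrip(), ""]
    for path, content in files.items():
        if content is None:
            # Deleted files show up in the diff but have no current contents.
            parts += [f"=== FILE: {path} (deleted in this PR) ===", ""]
        else:
            parts += [f"=== FILE: {path} ===", content.rstrip(), ""]
    return "\n".join(parts)


if __name__ == "__main__":
    packet = build_context_packet(
        "+console.log('hi');\n",
        {"app.js": "console.log('hi');\n", "old.js": None},
    )
    print(packet)
```

Explicit delimiters around each file give the model an unambiguous way to tell the diff apart from the full files, which matters when the prompt tells it to rely only on this context.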

Here is the exact prompt I used for this experiment. It's a great starting point, and you can see how you could evolve it using the principles we just discussed:

You are an expert code reviewer. Your task is to provide a detailed review of this pull request.

**IMPORTANT:** You have been provided with a complete "context packet" that includes both the pull request diff and the full content of every changed file. Rely *only* on this context to perform your review.

**Review Guidelines:**

- Focus on code quality, security, performance, and maintainability.

- For this project, also consider the gameplay and user experience.

- Provide specific, constructive feedback.

**Output Format:**

Please structure your feedback using this exact markdown format. If you have no feedback for a section, omit it.

---

###

*(Feedback on the user experience, game feel, and fun factor.)*

###

*(Specific suggestions for improvement, including code snippets if helpful.)*

- **File:** `path/to/file.js:line` - Your detailed suggestion.

###

*(Point out things you liked about the PR!)*

---

If you have no suggestions at all, respond with the single phrase: "This looks great! No suggestions."

Here is the context packet:

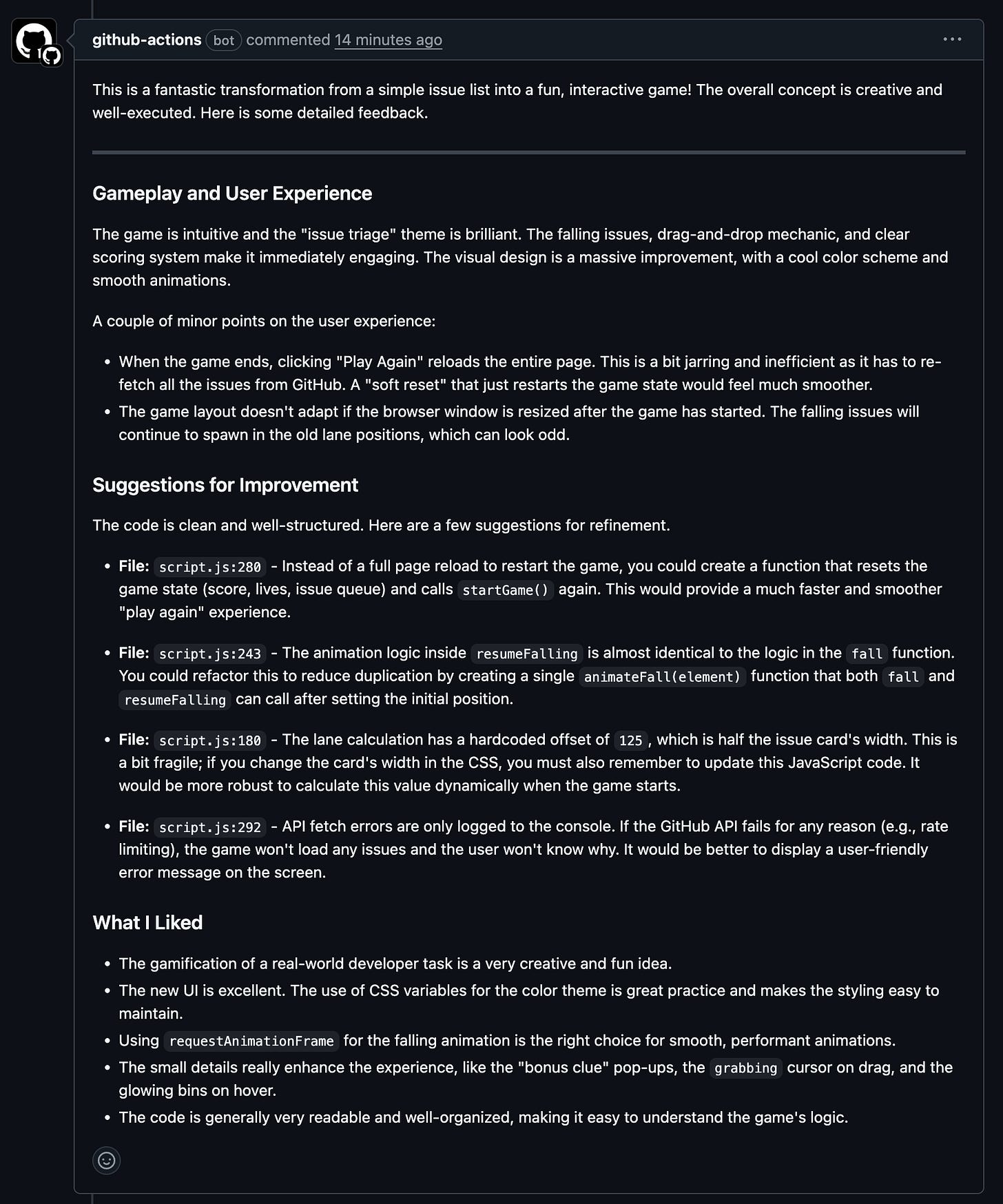
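Because the prompt fixes both an output format and a single "no suggestions" phrase, the reply can be handled mechanically. The following Python sketch shows one possible approach; the function names `has_no_suggestions` and `split_sections` are hypothetical, and the section titles in the usage example are borrowed from the output-format suggestion earlier in this post, not from the prompt above.

```python
NO_SUGGESTIONS = "This looks great! No suggestions."


def has_no_suggestions(review: str) -> bool:
    """True when the model replied with only the agreed 'nothing to report' phrase."""
    return review.strip().strip('"') == NO_SUGGESTIONS


def split_sections(review: str) -> dict[str, str]:
    """Split a review into {heading: body} using its '### ' markdown headings."""
    sections: dict[str, list[str]] = {}
    current = None
    for line in review.strip().splitlines():
        if line.startswith("### "):
            current = line[4:].strip()
            sections[current] = []
        elif current is not None and line.strip() != "---":
            # Skip the '---' rules that frame the review; keep everything else.
            sections[current].append(line)
    return {name: "\n".join(body).strip() for name, body in sections.items()}


if __name__ == "__main__":
    sample = (
        "---\n"
        "### Mandatory Changes\n"
        "- **File:** `app.js:3` - Handle the fetch error.\n"
        "\n"
        "### Optional Suggestions\n"
        "Consider a loading state.\n"
        "---"
    )
    for heading, body in split_sections(sample).items():
        print(heading, "->", body)
```

A script could use `has_no_suggestions` to skip posting a comment on clean PRs, or use `split_sections` to route only certain sections to the PR.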

The Result

Gemini CLI provided a genuinely useful first pass, catching things I might have missed and offering constructive suggestions. It felt less like a tool and more like a collaborator. You can see the AI's actual review in action in Pull Request #2.

A Case Study in Code Reviews

The way you ask the CLI to perform a task can have a dramatic impact on the quality and style of the output.

An example of this can be found in this folder, which explores different "personas" for automated code reviews. By iterating on the prompt used to review the same code changes, the author was able to generate a variety of code reviews, each with a different focus and style.

Here's a breakdown of the different prompts and the resulting reviews:

Prompt 1: The Google Engineer: This prompt asks for a review that adheres to the standards of Google's engineering practices. A key phrase in the prompt is: "Critique the code as if you were a senior engineer at Google."

The resulting review is meticulous, with a focus on code quality, style, and best practices, and it even suggests future improvements.

Prompt 2: The Meticulous Reviewer: This prompt asks for a line-by-line review of the code, with a focus on finding potential bugs and edge cases. The prompt states: "I want you to be meticulous and check every single detail."

The resulting review is detailed, with a number of specific suggestions for improvement and a focus on correctness.

Prompt 3: The Senior Engineer/Mentor: This prompt asks for a high-level review of the code, with a focus on the overall architecture and design. The prompt specifies: "act as a senior engineer and mentor... ask questions to provoke thought."

The resulting review is more conceptual, with a focus on the long-term maintainability of the code and a number of questions to guide the developer.

Prompt 4: The Efficient/High-Impact Reviewer: This prompt asks for a review limited to the most important issues, with the aim of getting the code shipped quickly. The prompt says: "focus only on the most critical issues... the goal is to ship quickly."

The resulting review is more concise, with a focus on the most critical issues and a more direct tone.

By investing a little time in prompt design, you can unlock a whole new level of productivity and get the exact output you're looking for.
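One lightweight way to run this kind of comparison is to keep the personas in a table and wrap each one around the same context packet. The sketch below assumes this setup and is not the code from the folder mentioned above. The dictionary keys, `BASE_TASK`, and the function name `persona_prompts` are made up for illustration, and the persona strings are only the partial key phrases quoted in this section, ellipses included.

```python
BASE_TASK = "Review the following pull request."

# Key phrases quoted in this post; the dictionary keys are made-up labels.
PERSONAS = {
    "google_engineer": "Critique the code as if you were a senior engineer at Google.",
    "meticulous": "I want you to be meticulous and check every single detail.",
    "mentor": "act as a senior engineer and mentor... ask questions to provoke thought.",
    "high_impact": "focus only on the most critical issues... the goal is to ship quickly.",
}


def persona_prompts(context_packet: str, personas=PERSONAS) -> dict[str, str]:
    """Build one prompt per persona, all wrapped around the same context packet."""
    return {
        name: f"{BASE_TASK} {phrase}\n\nHere is the context packet:\n{context_packet}"
        for name, phrase in personas.items()
    }


if __name__ == "__main__":
    for name, prompt in persona_prompts("<diff and files go here>").items():
        print(f"[{name}]\n{prompt}\n")
```

Holding the context packet constant and changing only the persona line makes it easier to attribute differences between reviews to the prompt and not to the input.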

What's Next?

Code review is one powerful use case, but it opens up more questions. In a future post, I'm planning to get more experimental and explore the --yolo flag in Gemini CLI. The idea is to see if the AI can move beyond just suggesting fixes to applying them directly.

My goal here is to share my experience and hopefully spark some ideas about your own workflows. How can Gemini CLI fit into your development process? Fascinating, isn't it?
